Introduction to Pente AI

Approximating Optimal Solutions to Massively Complex, Deterministic Games

What do Google Maps, many board games, and social media websites have in common? These are a small subset of things that have parts that can be modeled as "graphs". For anyone who is not familiar with computer science or discrete math lingo, the word "graph" here does not refer to a visualization or plot, but instead it refers to a collection of connected data points, where each data point is called a "node" and each connection between nodes is called an "edge".

For example, with Google Maps, nodes may represent road intersections and edges may represent the streets or highways connecting those intersections. With a board game like Chess, though, the nodes may represent game states (i.e. a snapshot of where all pieces are at or before a player takes a turn) and the edges may be the moves taken to get from one game state to another. With social media websites, the nodes in the graph may be users, and the edges may represent some sort of connection between users, whether that connection represents one user following another, or a user interacting with someone else's posts, or any other form of connection or interaction between two users.

Imagine you're visiting New York City, and you want to travel from your hotel to the nearest grocery store, but you don't know where the store is or the most efficient way to get there. So you open Google Maps and ask it to give you directions, but it tries to route you from New York City to Dallas to San Francisco, through three small towns on the west coast, and back to the grocery store that was three blocks away from your hotel. Obviously, this route is a terrible route, given that we want to minimize the time spent getting to the grocery store!

Let's generalize the issue. We have some graph, and some optimization goal. The goal could be to minimize travel time between two points (for travel planning), maximize the number of user interactions (for social media websites), or maximize the number of games won (when exploring the graph representation of a board game). But the number of paths to choose from is unfathomably large, and we can't possibly explore all possible paths through the graph to find the set of paths that best achieves our goal, so we have to find some algorithm to make clever guesses about which path will best optimize our chosen goal.

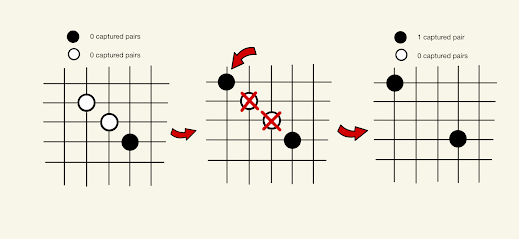

We will explore this problem on a sort of toy example by working to train a neural network to play two player Pente by efficiently exploring the graph of possible game states. Pente is much like TicTacToe, but the board is 19 by 19 instead of 3 by 3, and there can be two to four players. The goal is to either get five pieces in a row or capture five pairs of opponents' pieces (see Figures 1 and 2 for demonstration of how capturing pairs does and does not work). Much like the other types of graphs mentioned, the graph that represents game states and moves for Pente is much too large to completely explore given our current computing power limits, but the fact that Pente has simple rules makes the game easy to write a simulator for.

|

| Figure 1: Black captures one white pair |

|

| Figure 2: Black does not capture one white pair |

Why is this project important, though? Perhaps as you read this, you think that trying to create clever heuristics for playing a board game like Pente, Chess, Go, or other board games is simply an expression of eccentricity with no actual uses in the real world. This could not be more wrong. Better graph exploration methods (that are sometimes discovered or invented for fun purposes like making an AI to play Pente, Chess, or Go) are useful to make better internet search engines, music recommendations, self-driving cars, directions to the grocery store, and so many more uses. Improving graph exploration methods directly impacts all of these aspects of normal people's everyday lives.

The following questions are particularly relevant:

- Is it currently computationally feasible for a college student with fairly limited computing power to replicate a simpler version of AlphaZero?

- Is there a better way than Monte Carlo Tree Search to balance exploration and exploitation for reinforcement learning in deterministic and discrete games?

- How large of a neural network is needed to learn to play a game like Pente well?

- How quickly can such a neural network be trained?

- Is there a way to decrease the size of the network needed and decrease the training time needed?

Comments

Post a Comment